Machines that can improvise

Computers are already capable of making independent decisions in familiar situations. But can they also apply knowledge to new facts? Mark Winands, the new professor of Machine Reasoning at the Department of Advanced Computing Sciences, develops computer programs that behave as rational agents. This means they can independently think through the consequences of a decision—yet another leap forward in artificial intelligence (AI). But Winands has no time for doomsday predictions on how AI is set to outstrip humans. “Generic reasoning systems are laughably bad.”

In conversation, it is immediately evident that he is accustomed to explaining complex issues. What he wants computers to learn is similar to what people usually do, Winands says. Suppose you have to respond to an unforeseen circumstance with unknown consequences. How do you make the right decision and take an adequate course of action? We humans improvise. “My intuition suggests a, but I’m not sure. Let’s think about that for a moment; maybe alternatives b or c have more acceptable consequences. So you’re thinking about possible follow-up moves and what counteractions you can expect.”

Winands points to a pilot’s emergency landing on the Hudson River as a good example of an intuitive decision. Faced with an unfamiliar situation, the pilot had limited information and 30 seconds in which to weigh up myriad considerations. With the benefit of hindsight, one can always question whether it was the optimal decision. Under the circumstances, however, it was more than adequate. “Neither people nor computers have the capacity to consider every possible angle and reason everything out in advance. The point is to find an acceptable solution as quickly as possible, with limited information and computing power.” This is the crux of the field of machine reasoning.

Mark Winands is professor of Machine Reasoning at the Department of Advanced Computing Sciences (DACS) at Maastricht University. His research focuses on machine reasoning and automated decision-making processes. He is best known for his contribution to the development of the Monte Carlo Tree Search reasoning method. He has been head of DACS since 2021.

On Friday 16 December at 16:30, Winands will deliver his inaugural lecture “The Intelligent, the Artificial, and the Random”, which will also be livestreamed.

Board games

How do you teach a machine to improvise? Faced with a particularly thorny question, Winands will turn to his computer to play board games like chess or Risk. Not because he’s a gamer, but because they serve as a testing ground. “I focus on techniques to make better and faster decisions. Can I come up with a plan that’s better or faster than other AI systems or humans?” Machine reasoning is a challenge even in the context of board games, which are played in a controlled environment. “You can think a few moves ahead, but then the game explodes into a vast number of possibilities. The opponent knows more than you, the role of randomness and chance increases. Try coming up with solid reasoning then.” The board games are puzzles—as are planning and automation problems.

However hard it may be, machines that can make reasoned decisions are already in operation. One example, developed at Maastricht University, is an intelligent computer system involved in the production of shower heads and water taps in a factory. Winands sketches on a whiteboard how the system keeps several production lines running and adjusts the production plan to changing circumstances. Another successful ‘Maastricht’ example is the use of machine reasoning in the design of building layouts. “The AI system can calculate many scenarios based on a user’s wishes. Where do I place load-bearing walls and supports? What different layouts are possible while maintaining building safety? The design is completely automated.”

Child benefits scandal

How could the recent benefits scandal—in which the Dutch tax office wrongly accused thousands of Dutch parents of making fraudulent benefit claims—have turned out differently? In Winands’s view, a machine-reasoning method could have prevented a great deal of misery. Benefit claims were assessed using machine learning. The AI system was trained to recognise certain patterns as fraud, so when it encountered new, comparable cases, it naturally labelled them as fraud too. “If the system also had the capacity for reasoning, things could have been different. Hold on, this may look like a fraud pattern, but on second thoughts, it could also be something else. Machine reasoning offers a better way of justifying why one person receives a benefit and another is labelled a fraudster.” He emphasises the ethical aspects of AI. “It’s essential to develop systems that take account of our norms and values.”

Generic reasoning methods

A key challenge for the future is the development of generic or non-specific AI systems that can solve multiple types of problems. These systems would have the science-fiction-like capacity to learn and perform optimally any intellectual task a human can perform. We’re not there yet, Winands says. “AI systems can usually only do one thing well: translate a text, make a diagnosis. Ideally, you want a system that can also be used outside a specialised domain. But even something as simple as a system that plays several board games well is extremely difficult to develop. A generic AI system that can do many things at once will always perform less well—just as people do.”

Absolute limit

Reassuringly, Winands has his doubts that AI will ever surpass human intelligence. “What occasionally irritates me is the idea that AI can do everything. It can beat us at chess, translate texts, scan pictures, make diagnoses, you name it. People seem to think it’s an improvement of the human intellect. But a generic system that can play various board games does so laughably badly. Appalling, really. Perhaps human intelligence is an absolute limit that AI can never surpass. That’s the positive message I like to convey.”

By: Hans van Vinkeveen (text), Arjen Schmitz (photography)

Also read

-

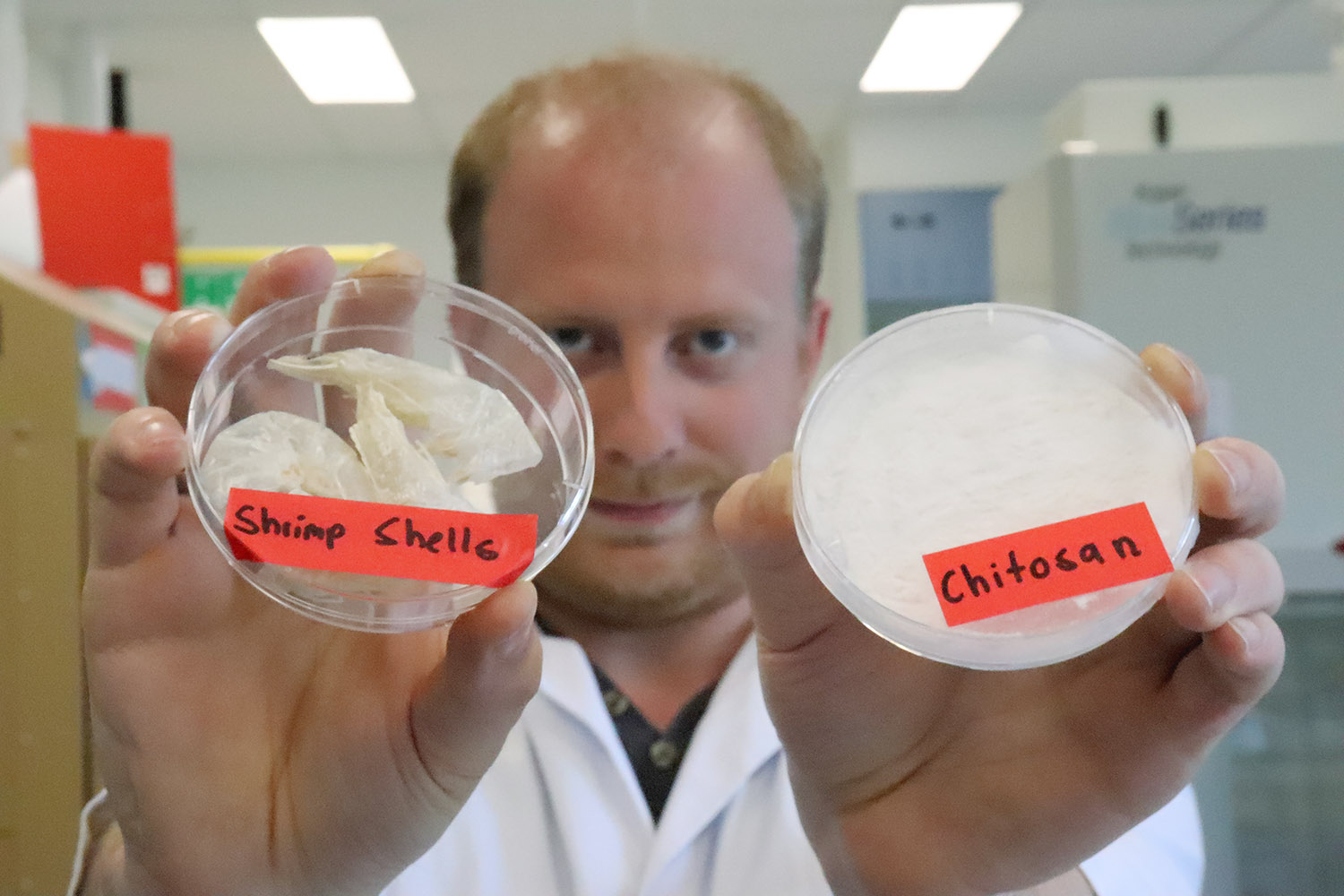

If we were to replace plastic with paper or glass, would the environment benefit? Surprisingly, no, says professor of Circular Plastics Kim Ragaert. She is calling for an alternative approach aimed at increasing awareness of and knowledge about recycling.

-

Eighty years ago, DSM opened its central laboratory for fundamental research in Geleen. Now the old lab is part of the Brightlands Chemelot Campus. This coming fall, the Festival Feel the Chemistry will look back on eighty years of innovation and will also look ahead to the future. Maastricht...