Measuring emotions from your smartphone?

Nearly everyone has a smartphone nowadays, and most people also own several other devices (tablets, laptops, etc.) to connect to the internet. Did you know that with just a simple smartphone or laptop, companies and universities can already predict what kind of emotions you are experiencing?

In a recent Innovating Pedagogy report by the Open University UK and Stanford University, Sharples and colleagues (2015) argued that emotions, attention and engagement are key drivers of learning. When you decide whether to get out of bed, read a complex article, or complete a challenging task, how you feel about these activities will determine whether you do them, whether you persist and, more importantly, whether you enjoy them.

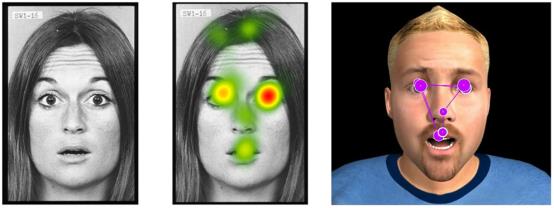

By combining eye tracking (the way a viewer's eyes focus on particular elements in a video or text) with facial expressions (such as amazement, or a shake of the head) and posture (leaning backwards or forwards), all captured on a smartphone, several companies can already track, trace and predict how people react to particular advertisements: where their attention is drawn, whether they like or dislike a particular scene, and whether they associate the brand with particular emotions.

In the field of the learning sciences, researchers are on the brink of a similar breakthrough. Techniques for tracking eye movements, emotions and engagement have matured over the past decade. Combining insights from this mature discipline with relatively inexpensive ways to measure where learners look and click in learning materials will allow researchers, teachers and learners to discover where, when and how people learn.

By combining people's smartphone or iPad behaviour with the areas where they focus their gaze, rich opportunities for personalised learning could become available. For example, if the analytics indicate that a student is rapidly skimming a text, she may already be familiar with the key concepts and be getting bored, so an engaging exercise might pop up, or an opportunity to chat with a peer about a complex problem. In this way, analytics of emotions can work alongside adaptive teaching to offer a more personalised learning experience.

While there may be valuable opportunities to link eye-tracking and emotion data with learning behaviour data, learners will only be willing to share their eye movements, facial expressions and posture data with others if they perceive clear benefits. One such benefit would be analytic tools that identify learners' emotions correctly and then provide appropriate teaching and useful feedback. Even once the practical problems are solved, complex ethical and privacy challenges will need to be addressed if learning providers wish to monitor students' emotional reactions. Providers will need to build trust with learners and educators by offering accurate, adaptive learning solutions based upon learners' actual emotions and needs.

Resources

Test your own emotional reactions to advertising videos: www.affectiva.com/technology/

For a recent debate on how emotions influence learning by Dr Bart Rienties (UM alumnus) and colleagues, see http://livestream.com/ou-connections/openminds-talks

Sharples, M., Adams, A., Alozie, N., Ferguson, R., FitzGerald, E., Gaved, M., McAndrew, P., Means, B., Remold, J., Rienties, B., Roschelle, J., Vogt, K., Whitelock, D. & Yarnall, L. (2015). Innovating Pedagogy 2015: Open University Innovation Report 4. Milton Keynes: The Open University.

This blog was written by Bart Rienties, who graduated in 2000 with an MSc in International Economic Studies and in 2010 with a PhD in Educational Psychology from Maastricht University.
